Will AI replace software engineers first?

Are software engineers uniquely susceptible to being replaced by AI? A discussion.

In a recent podcast, Ezra Klein of the New York Times had me questioning my career choice. The episode suggested that coding tasks may have some unique traits that make them particularly open to disruption:

(...), if you look at where people keep using it [AI] day after day, you’re looking at places where the product doesn’t need to be very good.

(...) It’s why it’s working pretty well for very low-level coding tasks. That kind of work doesn’t need to be very good. It gets checked and compiled, and so on.

So are the days of software engineers numbered? Are we more susceptible to disruption? Here are my thoughts on AI as it applies to the career of software engineering. The focus here is largely on generative AI, such as that found in products like OpenAI's ChatGPT and Microsoft Copilot.

AI as a coding assistant

The most common use case for generative AI today is as an assistant. This is true for all domains, including software engineering.

Tools such as GitHub Copilot work inside an engineer's existing code editor to suggest and review code at a low level. Existing low-code and no-code development tools such as Microsoft Power Platform, OutSystems and Salesforce have added natural language prompts, allowing application builders to describe how individual components or functions should behave and augmenting the previous building experience.

Here the scope is a single functional requirement, not an entire application or even an entire feature. The intent of the developer is clear and they're able to articulate this intent to the AI, so there is limited room for misunderstanding. The AI does not have the context of the broader application or business problem; its scope is narrow. This is a big improvement over traditional coding accelerators and low/no-code tools, but the AI is still only working as directed, requiring the oversight of a human engineer. It's doing the "low-level coding" but not much else.

AI as a replacement

For AI to start replacing traditional software engineering roles, it will need to deliver directly to business users, not just to software engineers. Some recent demonstrations look to be doing exactly that.

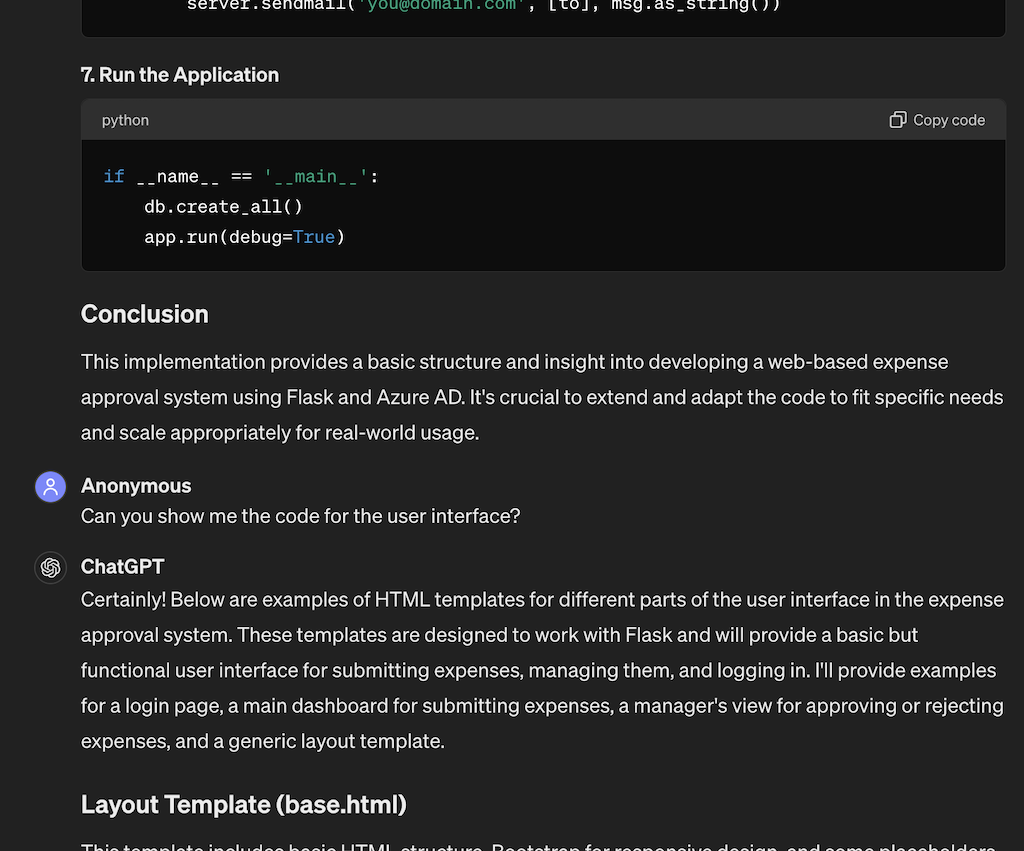

General large language models such as ChatGPT can address broader problems with some level of success. In the first example, it makes a good attempt at building a simple marketing landing page. In a second example, we provide some basic requirements for an expense approval system.

Here we've stretched it further: the result is superficially more impressive, but the gaps also become clearer. Advanced technical knowledge is needed to guide the AI.

Vendors such as Wix have integrated AI into their website builders to let business users specify their website requirements in natural language. This includes the layout and visual design.

The term "AI Agent" is used when the AI is given further autonomy over outcomes and is more than a simple question/answer chatbot. SWE-Agent uses large language models to "fix bugs and issues in GitHub repositories", a common task for software engineers. Devin.ai takes this one step further, advertising itself as "the first AI Software engineer". An impressive example of its abilities is "building and deploying apps end to end", where it can incrementally add features requested by the user. Hey, this sounds like what I do!

Devin creates an application incrementally in response to requests from a business user

These examples have a number of things in common which reflect the current state of generative AI:

- Non-trivial examples require skilled human oversight

AI works as directed, jumping to answers without asking clarifying questions. In the ChatGPT example it is up to the user to specify the requirements. To its credit, it does suggest further avenues for exploration, but following them up is left to the user. ChatGPT can feel "lazy", requiring much prodding to get to a useful output. Devin.ai has a better attitude but suffers from similar issues. Filling these gaps requires experienced human oversight.

- AI autonomy is more achievable when the scope is narrow

The most successful examples are those where the scope is narrow: the problem is well defined and the context is well known. The Wix example works because the domain is restricted to a simple website. Under the hood there are likely only a few possible page layouts and colour schemes. The user's intent is well known: they want a simple content or commerce site. SWE-Agent can fix bugs on GitHub because each issue is limited in scope and has already been defined, with technical specificity, by a human. It hasn't had to triage the problem or communicate with a business user.

What does a software engineer do?

Software engineers are responsible for developing solutions to business problems in collaboration with business stakeholders. Code is the output of this process, but writing code is not the most time-consuming or demanding part of it.

The difficulty in building software is the human-computer interface: converting business objectives and product ideas into requirements suitable for delivery. The natural language of business stakeholders (and of everyone else) is imprecise and subjective. Business users will usually describe the happy path. Then what? The software engineer will be expected to seek clarification and propose options. Some examples:

- testing assumptions

"If we modified this business process to work this way instead, we would greatly simplify the solution and reduce costs."

- what happens if things go wrong?

"What happens if the payment method is rejected?", "What if the user doesn't have a last name?"

- integration and other technical limitations

"The other system doesn't use the same identifier, so we will need to develop an approach for matching these records."

- non-functional and compliance requirements

Backup, security, maintainability and more.

- weighing trade-offs

"What library/platform should we use?", "Should we build this as custom or look to an off-the-shelf solution?"

- verification for correctness

Does the software do what it's supposed to do?
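To make the "what happens if things go wrong?" questions concrete, here is a minimal Python sketch of how two of them turn into explicit branches once a stakeholder has answered them. `Customer`, `PaymentRejected`, `display_name` and `place_order` are hypothetical names invented for illustration, and the answers encoded here (fall back to the first name; report a failed payment rather than confirming the order) are assumptions about what a stakeholder might decide, not a prescribed design.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Customer:
    first_name: str
    last_name: Optional[str] = None  # clarified: a last name may be absent


class PaymentRejected(Exception):
    """Hypothetical error raised by a payment provider when a charge fails."""


def display_name(customer: Customer) -> str:
    # Happy path: "First Last". Clarified edge case: no last name on record.
    if customer.last_name:
        return f"{customer.first_name} {customer.last_name}"
    return customer.first_name


def place_order(total_cents: int, charge: Callable[[int], None]) -> str:
    # Happy path: the charge succeeds and the order is confirmed.
    # Clarified edge case: the payment method is rejected, so the order
    # is not confirmed and the caller can ask for another payment method.
    try:
        charge(total_cents)
    except PaymentRejected:
        return "payment_failed"
    return "confirmed"


if __name__ == "__main__":
    def approve(amount_cents: int) -> None:
        pass  # stand-in for a successful charge

    def decline(amount_cents: int) -> None:
        raise PaymentRejected("card declined")

    print(display_name(Customer("Ada", "Lovelace")))  # Ada Lovelace
    print(display_name(Customer("Prince")))           # Prince
    print(place_order(4999, approve))                 # confirmed
    print(place_order(4999, decline))                 # payment_failed
```

Neither branch appears in a happy-path description; each exists only because someone asked the question and a stakeholder answered it.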

Navigating this complexity with business stakeholders is a critical skill for software engineers: bringing experience from inside and outside the domain to convert imprecise requests into a form suitable for implementation in code.

What does this mean for Software Engineering?

It's true that AI is already pretty good at the "low-level coding tasks", but it's got quite a way to go in the other areas.

AI will be an accelerant, once you know and define the problem

Defining requirements with the precision required for implementation can be an incredibly difficult exercise. So much so, in fact, that the industry has moved to methods such as Agile Scrum, which emphasises collaboration and iteration over up-front formal documentation. On anything but the simplest systems, requirements involve stakeholder negotiation and trade-offs.

Transformer AI models provide a one-way conversation: they do not test assumptions or expand your thinking. They rely on the person prompting the model to be the expert and to validate ideas; they are a junior assistant. Given a perfectly described problem they can code a reasonable solution, most of the time. Unfortunately, perfectly describing an entire enterprise software system is practically impossible, and documentation is never 100% comprehensive.

Human experience and intuition are still important

Once the requirements are known and the solution is built, how will you know that the solution correctly delivers on those requirements? Unfortunately, this is already a difficult problem, and it is compounded by the deficiencies of transformer-based AI.

The problem of AI hallucination is well documented by now. If your requirements are unusual or unique to your industry or organisation, the chance of the AI introducing errors increases. If your scenario isn't sufficiently represented in the training data, the AI will do its best to make something up.

AI agents don't know when to stop. With each successive step they can drift from their given goals, and they can get stuck in infinite loops. This isn't surprising, as the concept of "done" or "good enough" can be subjective, particularly in an enterprise scenario. This pragmatism, knowing when something is good enough, is arguably one of the most important engineering skills and is honed through experience.
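One common mitigation is to wrap an agent in a loop with an explicit, externally defined completion check and a hard step budget. The Python sketch below assumes a hypothetical `step` function (one agent action) and a hypothetical `is_done` check; neither corresponds to any particular agent framework's API. The budget only stops runaway loops: deciding what `is_done` should actually test is still the human judgement described above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentResult:
    output: str
    steps: int
    finished: bool  # True only if the done check passed within the budget


def run_agent(
    step: Callable[[str], str],
    is_done: Callable[[str], bool],
    max_steps: int = 10,
) -> AgentResult:
    """Repeatedly apply a (hypothetical) agent step to the current output.

    Stops as soon as the externally supplied done check passes, or after
    max_steps iterations, whichever comes first.
    """
    output = ""
    for n in range(1, max_steps + 1):
        output = step(output)
        if is_done(output):
            return AgentResult(output, n, finished=True)
    # Budget exhausted: report that the goal was NOT reached rather than
    # treating the last output as "good enough".
    return AgentResult(output, max_steps, finished=False)


if __name__ == "__main__":
    # A toy step that makes progress and a done check it eventually satisfies.
    print(run_agent(lambda s: s + "x", lambda s: len(s) >= 3))
    # A toy step that never makes progress: the budget stops it.
    print(run_agent(lambda s: s, lambda s: s == "done", max_steps=5))
```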

If the AI outputs code, we can easily check that it compiles, but this gives no indication that the code meets the desired requirements. For this we need software verification, including testing. AI is able to create test cases from the same requirements used to build the solution. This is useful as an accelerator, but correctness is not guaranteed: here the AI is effectively marking its own homework.

But things are moving fast

Of course, there are active efforts which may improve the ability of AI systems to collaborate with business stakeholders and understand enterprise context. GitHub Copilot Workspace aims to expand the AI's scope beyond a single file to the entire application. Similarly, Google's Gemini 1.5 AI model can consider extremely large amounts of context when presented with a problem, more than other models have been able to consider to date. There is also active research into ways that chat-based AI can reduce misunderstanding by asking clarifying questions, approximating the broader role of a software engineer.

I believe we will know in a relatively short period of time whether generative AI models will continue to improve using current approaches or whether further breakthroughs are necessary.

The opportunity

It feels like we're approaching, or maybe even past, peak AI hype. However, unlike blockchain and the metaverse before it, I believe it's clear that generative AI is going to have a long-lasting impact on our industry.

The broad set of applications for transformer AI technology makes a general assessment of the "success" or maturity of the technology virtually impossible. It will be good at some things and terrible at others. Combined with the apparently boundless promise of AI, this will make it especially challenging for technology leaders to identify which AI investments will prove valuable to their technology teams and organisations.

In the near term it's clear that AI's primary role in the software engineering domain will be as an assistant. This productivity boost will allow engineers to focus on the "high-value work", including interfacing with business stakeholders to better understand business challenges. They will then use their intuition and experience to guide AI-based tools to the desired outcomes.

Thankfully, there's no apparent limit to this high-value work. As the AI boom itself is showing, there is an insatiable appetite for software, driven by technology developments that were unimaginable only five years ago. The majority of proven use cases for enterprise AI are firmly within the reach of generalist software engineers.

So we're all good then?

I don't believe generative AI presents any greater "threat" to software engineering than it does to most other careers. Low-level, monotonous work such as some coding is ripe for disruption, but that's the way it's always been, and there's a seemingly unlimited supply of increasingly higher-value work.

Successful software engineers need a broad set of communication, reasoning and critical thinking skills. By the time AI gains these skills, the impacts will be felt by everyone, in all careers. Predictions vary greatly on whether and when this might happen. In the meantime, I'm going to enjoy being a software engineer.